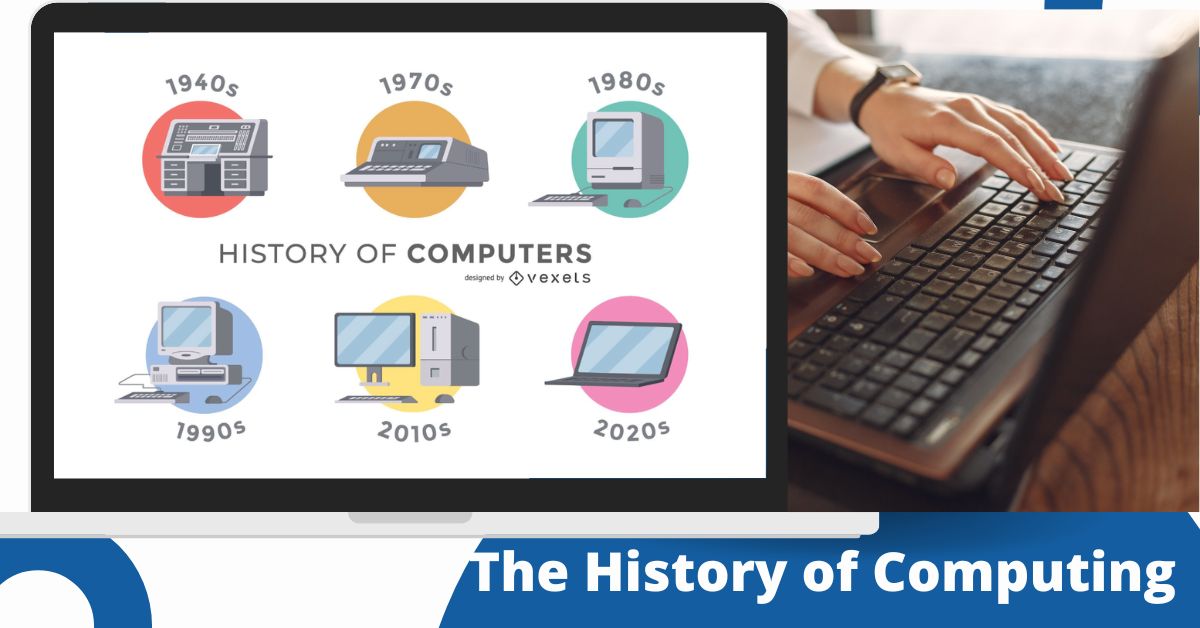

The history of computing stretches back centuries and reflects innovation, creativity, and humanity's drive to solve problems with greater efficiency and capability. It began with simple counting tools and mechanical devices and has since matured into artificial intelligence. What makes the history of computing so compelling is its timeline of ingenuity and technological breakthroughs.

Early Beginnings

Before the advent of mechanical and electronic computers, humans depended on the most basic tools. One of the earliest known devices is the abacus, a bead-based counting frame that dates back to ancient times; its origins are often traced to Mesopotamia, and it was later widely used in ancient China. Traders and mathematicians used it to perform basic arithmetic.

Other early devices were analog systems such as the Antikythera mechanism, an ancient Greek device used to predict certain astronomical events. Such systems were primitive by today's standards, but they laid the groundwork for structured problem-solving and logical thinking in computing.

Mechanical Era

The mechanical era marked a major step forward in the history of computing. Charles Babbage, often called the "Father of Computing," first conceived the Difference Engine and then designed the Analytical Engine, a mechanical general-purpose computer that could carry out arithmetic calculations automatically.

Ada Lovelace, a mathematician and collaborator of Babbage, is widely regarded as the first programmer. She designed algorithms for the Analytical Engine and realized that the machine could do much more than compute numbers, anticipating ideas close to the modern concept of general-purpose computing.

Electromechanical Computers

By the mid-20th century, electromechanical computing systems used electrical signals to perform calculations. British mathematician Alan Turing designed the Bombe, a machine crucial for decrypting Enigma-encrypted messages used by Nazi forces during World War II.

Early Computing in America: In the United States, machines such as the Harvard Mark I and ENIAC emerged. Built from relays and vacuum tubes, they performed calculations at unprecedented speeds but were enormous in size and narrow in focus.

The Emergence of Digital Computers

The invention of the transistor in 1947 revolutionized computing. Transistors were smaller, faster, and more reliable than vacuum tubes, making compact digital systems possible. Early programming languages such as FORTRAN and COBOL let programmers write instructions for computers in structured form.

During the 1960s, computing technology began to spread through scientific and commercial industries, eventually laying the foundation for the mainframe era.

The Mainframe Era

Mainframe computers reached their heyday in this period, which lasted well into the 1970s. Companies such as IBM made large-scale business computing available. These big machines enabled large-scale data processing for big businesses; although they were expensive and required dedicated environments, they cemented computing in the corporate mainstream.

The late 1970s and 1980s brought personal computing, which transformed technology from an industrial tool into a household necessity. The Apple II, designed by Steve Wozniak, and IBM's entry into the PC market put computing into the hands of individuals and small businesses.

The spread of personal computers accelerated software development. Companies such as Microsoft emerged, offering not only operating systems but also applications tailored to personal use.

Networks and the Internet

ARPANET was developed in the late 1960s. The TCP/IP protocols later enabled data to be exchanged freely between connected systems. Finally, Tim Berners-Lee's invention of the World Wide Web in 1989 transformed global communication, making information easy to access and share worldwide.

Contemporary Computing

Modern computing is defined by rapid innovation in cloud technology, artificial intelligence, and mobile platforms. Cloud computing has changed how data is stored and accessed, and it makes it practical to run systems such as machine learning algorithms that tackle complex problems in healthcare, finance, and logistics.

Impact on Society

Computing has transformed how people interact and how businesses operate. Whether at home or in the field, people now use Facebook, other social media sites, and online tools to solve the problems at hand, dramatically changing the face of communication and collaboration. Computational tools have also driven major advances in healthcare and manufacturing.

Future Trends in Computing

Quantum computing promises breakthroughs: by leveraging principles of quantum mechanics, it could in theory tackle certain large-scale problems beyond the reach of classical machines. At the same time, sustainable technology is now at the forefront, as developers try to minimise the adverse environmental effects of computing.

FAQ

Why is the abacus important in computing history?

The abacus is one of the earliest tools for performing simple arithmetic, which makes it one of humanity's first attempts at structured problem-solving.

Who was Ada Lovelace?

Ada Lovelace is regarded as the first computer programmer for her work on algorithms for Charles Babbage's Analytical Engine.

How did transistors change computing?

Transistors replaced vacuum tubes, leading to computers that were smaller, faster, and more reliable than before and paving the way for modern digital systems.

What role did ARPANET play in the origins of the internet?

ARPANET was a precursor to the internet, connecting systems through early networking protocols.

How has AI impacted modern computing?

AI has pushed the limits of what computers can do, helping solve very complex problems in healthcare, finance, and logistics.

What are the benefits of quantum computing?

Quantum computing may be able to solve certain problems that are impractical for traditional computers, mainly in cryptography, optimization, and scientific research.

Conclusion

The history of computing speaks volumes about human adaptability. From stone tools and abacuses to modern computers and other cutting-edge technologies, computing has repeatedly transformed how we work, connect, and live. As the field advances, it holds vast possibilities for even greater innovation in the future.